Dive Brief:

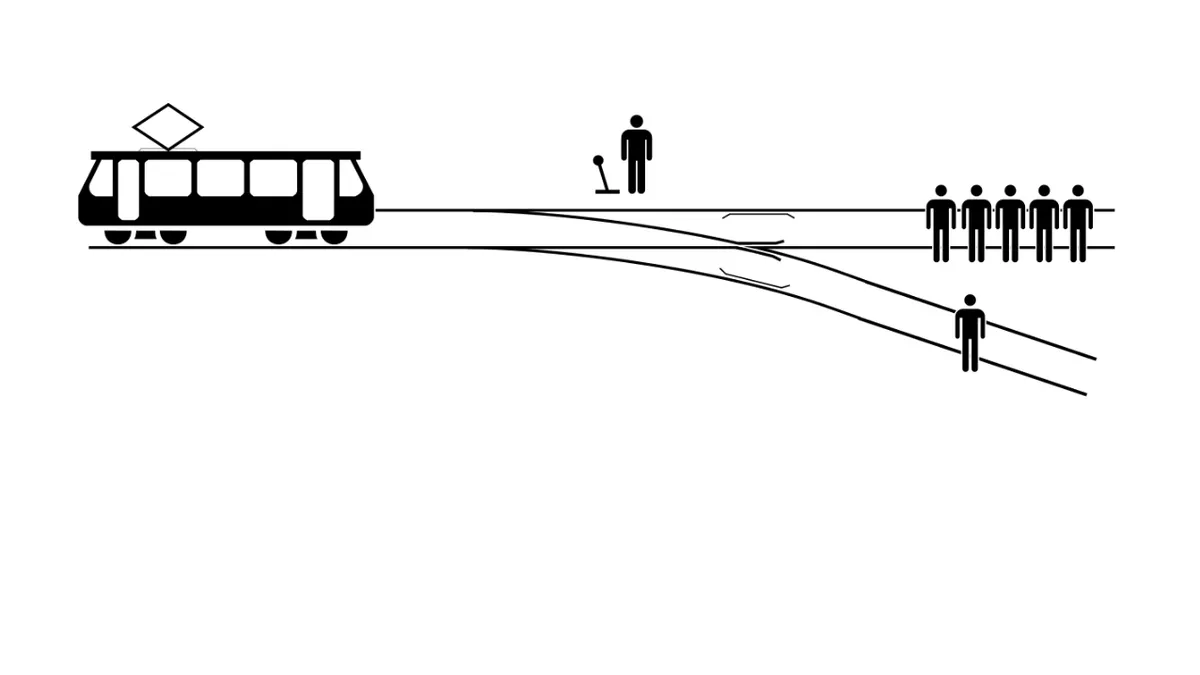

- As people debate the "trolley problem" for autonomous vehicles (AVs), meaning how the vehicles should prioritize lives in a collision, a Massachusetts Institute of Technology (MIT) survey found no universal agreement on the ethical questions involved. The survey collected responses from 2 million online participants in more than 200 countries, and the findings were published in the journal Nature.

- Researchers identified some broad trends: participants generally favored sparing humans over animals, many people over a few, and younger people over the elderly.

- The responses did show cultural differences along geographic lines, however. For example, people from countries the researchers classified as “eastern” were more likely to favor sparing elderly people, rather than the young.

Dive Insight:

The ethical questions surrounding AVs may prove to be the most difficult for automakers and programmers to solve. They are most often framed around the "trolley problem," the classic ethical dilemma that asks whether it is better to let a vehicle hit several people or to redirect it so that it kills just one. For AVs, the question could end up being more than hypothetical, as cars may have to choose between the safety of their passengers and that of bystanders.

The discussion was brought to the fore after the fatal collision between an Uber self-driving car and a pedestrian in Tempe, AZ, earlier this year; subsequent reports found that the vehicle’s software detected the pedestrian but did not stop. U.S. Sen. Gary Peters, D-MI, one of the leading legislators working on AVs, has said more work needs to be dedicated to the "moral and ethical decisions" the vehicles must handle.

A study from the University of Osnabrück released last July found that it would be possible to program human ethics into an AV, but the question remains which ethics should be programmed. The answer could significantly affect how much people trust AVs, even if the vehicles prove safer than human drivers.

The MIT study, which collected responses through an online platform called the “Moral Machine,” does not offer a clear set of directives that will satisfy everyone. Researchers, including lead author and MIT postdoc Edmond Awad, said the preferences should be part of the public discussion of AV ethics. In a release, however, Awad said it was still an open question "whether these differences in preferences will matter in terms of people’s adoption of the new technology when [vehicles] employ a specific rule."